A new team without any agile experience finds the adoption of the Scrum Framework seductive. They observe that others have achieved success with agile, and so, why not us?

Sounds good!

They pick up boilerplate like The Scrum Guide, introduce electronic card walls, and start speaking jargon like "story points," "backlog grooming," "prioritization," "sprints," and "velocity."

When they reveal this to leadership, there is enthusiasm and buy-in. They are heralded as an agile example for others to follow.

Upon closer scrutiny, there are 30 cards in the "doing" column, assigned to various combinations of 5 or 6 people.

But two of those people are out at a customer site, and another is on vacation.

Some cards in "doing" have not been updated in months.

Completing any one card takes many, many weeks. On the rare occasions the board is inspected, people are assigned, unassigned, and reassigned without notice. Due dates are pushed further into the future.

There is no indication on the card for whom the work is done, or who has a stake in the outcome.

Those who created the card wall are wedded to the wall itself. Everyone else lacks an understanding of the cards on the wall and their purpose.

When good-faith changes are suggested to promote clarity, controversy erupts and change is dismissed out of hand.

"Why do we call it backlog when what we mean is committed?"

"Because everyone else calls it backlog."

"We cannot possibly be working on all the cards in the 'doing' column."

"Yes we are."

I conclude this parable with a question: what do you think will be the reaction of leadership when they see this card wall at the next quarterly meeting, and it's impossible to see that any of the cards moved, or that any work got done at all?

Evolving Toward Agile

Musings on the acquisition of agile expertise.

Wednesday, July 5, 2017

Saturday, July 1, 2017

On Thomas Paine and the Dunning-Kruger Effect (Part I)

A couple of recent experiences have led to the following sequence of posts. This one relates the first.

The first came in my role as the engineering manager of a team of Extreme Programming [XP] bootcampers, none of whom had more than a year of practice.

Our first candidate for the tech lead role was an agilist I knew from a prior shop. He came to the table with more than 20 years of practice, was versed in all the current platforms (web and mobile), and had more than 10 years of experience in XP. He was a little rusty after a recent hiatus.

We governed by consensus, and when we convened at the end of the day to decide, the vote was to offer him not the tech lead role but an entry-level position, my vociferous opposition notwithstanding.

Of course, the candidate, knowing his worth, declined our offer and joined a well-known XP boutique.

Some months later, after my team had gained some practice, I heard from more than one of our members that they would have voted to hire that candidate had they known then what they came to know.

Why could my team not see at the time what was so evident to me?

Now, the experience with this team was rewarding in light of how much we learned. When we went our separate ways, everybody had upped their game:

- we sent our youngest member on to his current role at Google;

- the de facto team lead went from no experience in the functional style to practicing backend Haskell;

- our HTML/CSS designer went on to practice Node.js, MongoDB, and Redis; and

- I recently had the privilege to recommend our newest member for his new role after his stint practicing hard-core ops on Docker/Kubernetes.

But a question has remained with me to this day, and it recently recurred:

- what gave my team such clarity in hindsight about a candidate they had passed over at the time?

Monday, April 1, 2013

An Experience Report: Building a Mobile Automated Test Lab With SOASTA TouchTest

Prelude

I was developing a PhoneGap application for iOS and Android tablets. Agile/lean engineering practice led to test-first development, but when I began to build out continuous integration, I found that the offerings for automated mobile functional testing and deployment lacked maturity.

I discovered SOASTA when I attended a webinar on mobile functional testing that culminated with a photo of a mobile lab, to which a continuous integration process was deploying an automated suite of functional tests and running it across a variety of iOS and Android targets.

No further inspiration required.

Such an outcome would provide my organization with a high degree of code coverage for our mobile offerings and protection against defect regression -- to build the product right -- and establish an infrastructure to deploy feature changes continuously -- to build the right product. The doors to lean mobile platform development are now wide open for us.

SOASTA and CloudBees

The webinar was hosted by SOASTA in partnership with CloudBees, a cloud-based provider of Platform-as-a-Service for developing web and mobile applications. We closely followed the practices recommended by SOASTA and CloudBees, and built out a Jenkins deployment pipeline consisting of the following jobs:

- build the JavaScript assets for deployment to the iOS PhoneGap project;

- build and deploy an iOS app capable of running SOASTA functional tests to the devices tethered to the mobile lab;

- kick off the SOASTA functional tests;

- build and deploy the production-ready iOS app to our enterprise Appaloosa store and notify QA that a new release is ready for a spot check.

We also built out an analogous pipeline to deploy and test our Android PhoneGap project, designed to run concurrently with the iOS pipeline.
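The gating described above, in which each job runs only if the one before it succeeded while the iOS and Android pipelines run side by side, can be modeled in a few lines of Ruby. This is a minimal, hypothetical sketch for illustration only: it is not Jenkins configuration or SOASTA tooling, the stage names are invented, and each stage is assumed to be a callable that returns true on success.

```ruby
# Hypothetical model of a fail-fast deployment pipeline (not Jenkins itself).
# Each stage is a [name, callable] pair; the callable returns true on success.
def run_pipeline(stages)
  completed = []
  stages.each do |name, job|
    # Stop at the first failing stage, so later stages never run.
    return { completed: completed, failed: name } unless job.call
    completed << name
  end
  { completed: completed, failed: nil }
end

# Runs each platform's pipeline on its own thread and collects the
# results into a hash keyed by platform.
def run_concurrently(pipelines)
  threads = pipelines.map do |platform, stages|
    Thread.new { [platform, run_pipeline(stages)] }
  end
  threads.map(&:value).to_h
end

# Usage with invented stages: the Android functional tests fail,
# so its release stage never runs.
passes = -> { true }
fails  = -> { false }
results = run_concurrently(
  {
    ios: [["build assets", passes], ["deploy test build", passes],
          ["functional tests", passes], ["publish release", passes]],
    android: [["build assets", passes], ["functional tests", fails],
              ["publish release", passes]]
  }
)
puts results[:android][:failed] # prints: functional tests
```

Threads keep the sketch dependency-free; in a real setup, the CI server schedules the jobs and decides what runs after a failure.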

Features of Our Mobile Lab

(1) We run over 350 automated unit and integration tests and achieve greater than 80% code coverage.

(2) Using SOASTA command-line tooling and the Jenkins Xcode plugin, we build a mobile-functional-test-ready iOS target and deploy it to the devices tethered to the mobile lab.

(3) Again, we use SOASTA command-line tooling to run a pre-recorded mobile functional test on a SOASTA-hosted instance of their TouchTest environment.

(4) Finally, provided each job succeeds, we build and deploy a production-ready release to our Appaloosa private enterprise store.

(5) There was also a step, which I purged, that uploaded the build to a QA site for approval. This meant that the build would not pass until QA completed their exploratory test phase. I was uneasy that this step prevented continuous deployment, and after discussing the topic with Joshua Kerievsky, I felt it was right to purge it. He asked me a simple question: can the automated test do exactly what QA would do? Because of the accurate recording I experienced with SOASTA TouchTest, my answer was: yes.

Quick Starts and Support

There is a wealth of guidance from both SOASTA and CloudBees for getting a mobile lab up and running. SOASTA's quick starts and knowledge base are comprehensive, and I found the forums and personalized sales support via email extraordinarily responsive.

The same is true of CloudBees. Their partnerdemo site and blog provided concrete configuration and practice for many of the scenarios we required. And Mark Prichard of CloudBees generously devoted a considerable amount of his own time to walking through the fine points of my Jenkins configuration.

Summary

The other offerings I discovered that support mobile functional testing each had obvious shortcomings of one kind or another. SOASTA is the only one, to my knowledge, that can support development at enterprise scale.

My favorite feature is the ability to deploy from the command line to iOS hardware targets in a continuous deployment scenario without manual intervention.

At the time of this posting, I learned that SOASTA has shipped my most anticipated feature: one that wakes up a sleeping device tethered to the mobile lab when the automated build deploys.

Our experience with SOASTA tooling for automated mobile functional testing was quite satisfying.

Tuesday, July 27, 2010

Initial Impressions on Ruby

Over the last couple of weeks, I took the occasion to introduce myself to Ruby, with an exercise as impetus:

- given 3 .csv data files, each one of which is delimited differently,

- then produce a report of the data in 3 different sort orders.
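To make the exercise concrete, here is a minimal sketch of the two ideas it combines: detecting each file's delimiter and sorting the same records three ways. Everything specific here is an assumption for illustration, not the original exercise: the record fields (last name, first name, gender, birth date), the three delimiters (pipe, comma, and space), and the three sort orders.

```ruby
require "date"

# Hypothetical record layout; the post does not list the actual fields.
Record = Struct.new(:last_name, :first_name, :gender, :birth_date)

# Assumed delimiters, one per input file. Order matters: " | " and ", "
# also contain a space, so the plain space is tried last.
DELIMITERS = [" | ", ", ", " "].freeze

# Parses one line such as "Smith | Anna | F | 3/4/1980" (M/D/YYYY date).
def parse_line(line)
  delimiter = DELIMITERS.find { |d| line.include?(d) }
  last, first, gender, date = line.strip.split(delimiter)
  month, day, year = date.split("/").map(&:to_i)
  Record.new(last, first, gender, Date.new(year, month, day))
end

# Three assumed sort orders for the report.
def sort_orders(records)
  {
    gender_then_last_name: records.sort_by { |r| [r.gender, r.last_name] },
    birth_date: records.sort_by(&:birth_date),
    last_name_descending: records.sort_by(&:last_name).reverse
  }
end
```

Each file's lines would be read with File.readlines, passed through parse_line, and the report printed once for each entry returned by sort_orders.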

Despite the vast landscape of tutorials, books, and other literature on Ruby, it was easy to pick The RSpec Book as my basic guide. It is the only book I know of with an emphasis on both micro- and story-tests, and it counts Dan North, David Chelimsky, and Dave Astels among its authors. (I can't imagine who wouldn't want to follow those guys.) With that as my guide, I would have expert direction on driving solutions from tests.

I also needed a language reference that could boot me up as quickly as possible. This required a little more comparative analysis across the landscape of Ruby resources, along with some fitful starts and detours. I eventually settled on Design Patterns in Ruby -- because it had a short chapter on Ruby basics in preparation for the discussion of patterns, and because I could use the patterns themselves as examples of good Ruby usage -- and Rails for Java Developers -- because it helped me transfer my Java and C# competency.

What first impressed me was the speed of the interpreter. It had been many years since I had worked with an interpreted language. I remember the mass migration of developers from Microsoft Basic to Borland Delphi Pascal, because its compiled code executed drastically faster than interpreted Basic. I was thus pleasantly surprised to see my Cucumber and RSpec tests execute at speeds comparable to my JUnit tests. Now, I'm sure the performance gap between compiled and interpreted languages remains, and that the Java compiler will stay appealing to developers for that reason. Nevertheless, the speed of the interpreter distinguished Ruby in my mind.

Another impression regards the richness of the Ruby language. I had test-driven a solution to the same problem in Java with 32 tests and 9 domain classes. My Ruby solution had 15 Cucumber scenarios (story tests) containing 51 steps, and 23 RSpec examples (micro tests). So there were a lot of tests; however, I ended up with only 3 domain objects:

- one to accept the command line input;

- a report object to print the report; and

- a parser object to parse the input.

Moreover, the report object only had 3 methods, and the parser object only had 9. If I knew the language more intimately, then I'm sure the objects would've been even smaller.

What I mean by richness of the language is illustrated by my application's entry point:

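```ruby
# Application entry point. USAGE, FILE_NOT_FOUND, standard_output,
# Parser and Report are defined elsewhere in the application.
def begin(command_line)
  abort USAGE if command_line.empty?
  abort FILE_NOT_FOUND unless command_line.all? { |file| File.exist?(file) }

  # Read each file's lines; File.readlines closes the file afterwards.
  record_sets = command_line.collect { |file| File.readlines(file) }
  parsers = record_sets.collect { |records| Parser.new(records) }

  Report.new(standard_output, parsers).print
end
```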

Note how expressive the guard clauses are, and the novel way in which Ruby lets you place a conditional such as "if" after the statement it guards. Note also the expressive way in which boolean questions are asked -- with a question mark.

But since my background is in mathematics, I was really taken by the way in which the syntax echoes naive set-builder notation. In mathematics, I can specify the set of all integers strictly between 1 and 10 as follows:

$$\{\, i \in \mathbb{Z} \mid 1 < i < 10 \,\}$$

This reads:

for each i, an element of the set of all integers, such that 1 < i < 10.

I found myself reading enumerators on arrays and hashes in the same way:

```ruby
zero_thru_ten = [] # initialize to empty array
zero_thru_five = [0, 1, 2, 3, 4, 5]

# for each element, i, append (2 * i) to the array
zero_thru_five.each { |i| zero_thru_ten << i * 2 }

zero_thru_ten # => [0, 2, 4, 6, 8, 10]
```

Because it is common in mathematics to refer to elements of sets as x or y, and to elements of matrices by i and j, I found that my refactorings often renamed references away from those terms. For example, here's a refactoring that I missed:

```ruby
def parse_input
  parsed = elements.collect { |i| columns.collect { |j| i[j] } }
  parsed.collect { |i| date(i); gender(i); i }
end
```

This code should be much more legible. Nevertheless, the facility that Ruby provides for easily operating on arrays and matrices reminds me a great deal of my first programming language -- APL -- which was designed with matrix mathematics in mind.
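One way the missed refactoring might look, as a hedged sketch: the single-letter block parameters give way to names from the domain, and Array#values_at replaces the inner collect over column indices. The two-argument Parser constructor, the field positions, and the date and gender rules below are hypothetical stand-ins for the original's date and gender helpers, which the post does not show.

```ruby
# Hypothetical Parser: only parse_input corresponds to the method above.
# The constructor, field positions and normalization rules are invented.
class Parser
  GENDER_FIELD = 1 # position of the gender code after column selection
  DATE_FIELD = 2   # position of the birth date after column selection
  GENDERS = { "M" => "Male", "F" => "Female" }.freeze

  attr_reader :elements, :columns

  def initialize(elements, columns)
    @elements = elements # rows of raw field strings
    @columns = columns   # indices of the fields to keep, in report order
  end

  def parse_input
    records = elements.map { |row| row.values_at(*columns) }
    records.each do |record|
      normalize_date!(record)
      normalize_gender!(record)
    end
  end

  private

  # Rewrites a hyphenated date such as "3-4-1980" as "3/4/1980", in place.
  def normalize_date!(record)
    record[DATE_FIELD] = record[DATE_FIELD].tr("-", "/")
  end

  # Expands "M" or "F"; any other value is left unchanged.
  def normalize_gender!(record)
    code = record[GENDER_FIELD]
    record[GENDER_FIELD] = GENDERS.fetch(code, code)
  end
end
```

Because each returns the array it iterates over, parse_input still returns the parsed records without the original's trailing i in the block.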

(More to follow.)

Friday, June 25, 2010

Industrial Logic

It is difficult to learn agile engineering practices on your own. No matter how many books you read or how many chances you take with production code, there is always a risk that you will end up in the weeds trying to slash your way out. That's because the principles and practices inherent in agile software engineering require an apprenticeship with a master who has failed at all those practices for many, many years, and can guide you so that you can avoid the pitfalls. This is not unlike other crafts -- most notably, carpentry and martial arts -- in which expert guidance is imperative.

So there is a path toward software engineering agility upon which individuals like me can embark. My new goal is to spend the next 6-12 months following the entire Industrial Logic eLearning curriculum in much more depth than I was heretofore able, so that I can advance to that next level.

But what of the aspiring agile software engineer, such as myself, who is inspired by the mastery of those at the vanguard of the movement toward agile? You cannot afford the price of a personal coach unless you are independently wealthy. And with the current state of agile adoption in the corporate enterprise at only 20% [Martin Fowler, ThoughtWorks Lunch-and-Learn, Chicago, circa late 2009], you are fortunate if you gain coaching from a master during the normal course of your employment.

There is an approach that I can, with confidence, highly recommend: eLearning at Industrial Logic. The reason why I can be so confident is that I could not have won my first agile job without that curriculum.

I had no prior agile experience before I was hired as an agile developer/tester. But when I did my code review during the interview process, the reviewer was impressed with my application of test-driven development, refactoring, mocking and patterns that was evident in my code. These are practices that I refined while working on Industrial Logic's courseware.

What makes the courseware most effective, and what distinguishes Industrial Logic from other Agile Training, Transitioning and Consulting Organizations, is the level of expertise of their team -- most of whom are luminaries in the agile community -- and the fact that their courseware is designed for them to provide you near-realtime feedback as you work through the exercises.

These exercises require you to think through problems. Many require you to write code to implement refactorings or patterns, or to test-drive toward solutions to problems that come from their real-world practice. And if there is something that you don't understand about your answer, then Joshua Kerievsky or Bill Wake or Brian Foote or Naresh Jain or someone like that will respond to your question by the next day. I cannot think of a better way to get that kind of intimate coaching at such a high level, at a price that is affordable to individuals like myself. Yet they have found a way to improve even on that.

They recently added a new pricing model to make the curriculum even more affordable to individuals. But the most exciting prospect is their Early Access Program for their Sessions Album: Sessions EAP. I think this innovation, in lieu of an expert coach, will help me toward my goal of agile mastery.

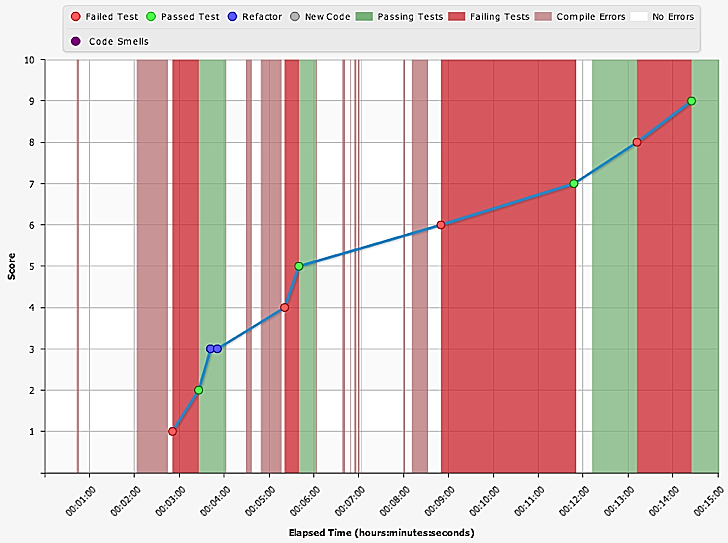

The concept is simple. Install their plugin into your Eclipse IDE. When you press record and start test driving, the plugin will accumulate data in the background. When you stop recording and upload your session data, you can obtain graphics on the quality of your test driving:

- how many refactorings did you apply?

- what kinds of refactorings?

- what kinds of code smells did you address?

- how long did you stay in each phase of the cycle --

  - red?

  - green?

  - refactor?

- etc.

and this:
